Artificial Intelligence (AI) represents a major technological advance with profound implications for nearly every aspect of modern society. This trend has accelerated with the emergence of generative AI systems such as Mistral, ChatGPT, and Gemini.

From healthcare to finance, including industry and public services, AI promises considerable benefits.

However, its deployment also raises ethical, social, and legal concerns, leading governments to establish regulations to govern its use.

The European Union adopted new legislation on Artificial Intelligence (AI) in 2024 (approved by the European Parliament in March and by the Council in May): the world's first comprehensive law on AI.

AI regulation is currently expanding worldwide, with approaches that vary according to legal context, priorities (fundamental rights, safety, innovation), and institutional maturity. In the United States, for instance, around a hundred laws are being adopted on various issues (algorithmic discrimination, deepfakes, consumer protection, etc.), and states such as Colorado and Utah have already enacted notable laws.

What is the AI Act?

The AI Act (Regulation (EU) 2024/1689) is legislation designed to regulate and promote the development and commercialization of artificial intelligence systems within the European Union.

Proposed by the European Commission in April 2021, the AI Act was published in the Official Journal of the EU on July 12, 2024, and entered into force on August 1, 2024, after three years of negotiations.

This initiative aims to foster the development of responsible AI, ensuring fundamental rights, safety, and ethical principles while encouraging and strengthening AI investment and innovation throughout the EU.

Definition of Artificial Intelligence

Artificial Intelligence (AI) is used to automate tasks, analyze data, make decisions, customize user experiences, and create autonomous systems in various fields such as health, finance, manufacturing, and many others.

The development of artificial intelligence involves the design, training, and optimization of algorithms and computer models to enable a system to simulate human cognitive processes or perform specific tasks autonomously.

AI systems are developed using various learning techniques, the main ones being:

- Supervised learning: in this method, the AI model is trained on a labeled dataset, where each data point is associated with a desired label or output.

- Unsupervised learning: the AI model is exposed to unlabeled data and seeks to discover intrinsic structures or patterns within that data.

- Reinforcement learning: an agent interacts with a dynamic environment and receives rewards or penalties based on the actions it takes.
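To make these three techniques more concrete, here is a deliberately tiny Python sketch of our own (not tied to any product or library). Least-squares line fitting stands in for supervised learning, one-dimensional k-means for unsupervised learning, and an epsilon-greedy multi-armed bandit for reinforcement learning. The bandit is a simplified case: its environment is fixed rather than dynamic, but it shows the same reward-driven update loop.

```python
import random


# Supervised learning: labelled pairs (x, y); learn y ≈ a*x + b by least squares.
def fit_line(xs, ys):
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    a = cov / var
    return a, mean_y - a * mean_x


# Unsupervised learning: unlabelled 1-D points; discover two groups with k-means.
def kmeans_1d(points, iterations=10):
    centres = [min(points), max(points)]  # start from the two extremes
    for _ in range(iterations):
        groups = [[], []]
        for p in points:
            nearest = 0 if abs(p - centres[0]) <= abs(p - centres[1]) else 1
            groups[nearest].append(p)
        # Move each centre to the mean of its group (keep it if the group is empty).
        centres = [sum(g) / len(g) if g else c for g, c in zip(groups, centres)]
    return sorted(centres)


# Reinforcement learning (simplified): the agent picks an action, receives a
# reward of 1 or 0, and updates its running estimate of each action's value.
def bandit(reward_probs, steps=1000, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    estimates = [0.0] * len(reward_probs)
    counts = [0] * len(reward_probs)
    for _ in range(steps):
        if rng.random() < epsilon:  # explore: random action
            action = rng.randrange(len(reward_probs))
        else:  # exploit: best action so far
            action = max(range(len(reward_probs)), key=lambda i: estimates[i])
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0
        counts[action] += 1
        estimates[action] += (reward - estimates[action]) / counts[action]
    return max(range(len(reward_probs)), key=lambda i: estimates[i])
```

For example, `fit_line([1, 2, 3], [2, 4, 6])` learns a slope of 2 from labelled examples, `kmeans_1d([1, 2, 3, 10, 11, 12])` finds two clusters centred on 2 and 11 without any labels, and `bandit([0.1, 0.9])` learns, purely from rewards, that the second action pays off more often.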

AI systems can learn and adapt from data, whereas traditional tools are limited to executing predefined instructions.

Artificial intelligence is not merely about executing commands: it involves the ability to reason and adapt based on experience.


Why is AI regulation necessary?

The AI Act aims to build trust in artificial intelligence technologies. While some AI systems present low risks and help address various societal challenges, others pose real risks.

Thus, the requirements of the AI Act focus on:

- Targeting specific risks associated with AI (errors, cognitive biases, discrimination, or impacts on data protection)

- Banning AI practices that present unacceptable risks

- Determining a list of high-risk applications and defining clear requirements for AI systems used in them

- Imposing specific obligations on deployers and providers of these high-risk applications

- Requiring compliance assessments before deploying or commercializing an AI system

- Monitoring rule enforcement after the commercialization of an AI system

- Establishing a governance structure at both European and national levels.

Who is affected by the AI Act?

The AI Act applies only to systems and use cases governed by EU law. It regulates the use of AI systems within the EU, whether developed within the Union or imported from third countries.

Stakeholders correspond to all actors involved in the lifecycle of an AI system, namely providers, deployers, importers, and distributors.

However, Article 2 sets out some notable exclusions from the scope of the law, such as:

- Activities for military, defense, or national security purposes;

- AI systems developed and deployed exclusively for scientific research and development purposes;

- The use of AI systems by individuals for strictly personal, non-professional activities;

- Open-source models, under certain conditions.

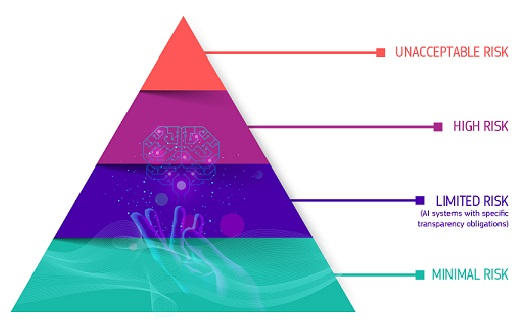

Risk levels

The approach adopted by the AI Act is risk-based. The regulatory framework establishes four categories of risk for artificial intelligence systems. The aforementioned stakeholders must ensure compliance with the AI Act requirements according to the risk level.

This applies to application-specific systems, whose risk is assessed based on their concrete use case (e.g., human resources), and not to general-purpose AI models, which are treated differently because they can perform distinct and sometimes unpredictable tasks.

Unacceptable risks:

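AI systems that present an unacceptable risk cannot be placed on the market, put into service, or used within the European Union. These include AI systems deemed a clear threat to the safety, livelihoods, and rights of individuals, from government social scoring to voice-assisted toys that encourage dangerous behavior.

Examples: social scoring, manipulative or subliminal techniques, emotion recognition in the workplace, and, with narrow exceptions, real-time remote biometric identification in publicly accessible spaces for law enforcement. (Deepfakes are not banned; they fall under the transparency obligations described below.)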

High-risk systems:

AI systems judged to be high-risk form the core of the AI Act's requirements. They can be divided into two categories.

The first corresponds to systems that are products, or safety components of products, already covered by existing EU sectoral safety legislation (e.g., the Toy Safety Directive). A compliance assessment by a notified third party is mandatory for these systems.

The second corresponds to systems used in the areas listed in Annex III of the AI Act, such as:

- Critical infrastructure, such as transport, where failures could endanger the life and health of citizens;

- Education and vocational training;

- Employment, workforce management, and access to self-employment, for example CV-sorting software used in recruitment;

- Access to essential public and private services.

Some practices in these same areas go beyond high risk and are prohibited outright:

- Emotion analysis in the workplace: using AI to infer employees' emotions or to classify employees biometrically is prohibited because of the risks to privacy and of discrimination.

- Social scoring: AI cannot be used to evaluate or rank individuals based on their social behaviour or personal characteristics where this leads to unfair or discriminatory treatment, including for commercial purposes.

- Manipulative AI systems: any AI exploiting subliminal techniques to significantly influence a person's behaviour, with a risk of physical or psychological harm, is banned. Companies must avoid deploying AI systems that manipulate consumers in harmful ways.

High-risk systems must meet stringent obligations before being placed on the market, including:

- Implementing adequate risk assessment and mitigation methods.

- Using high-quality datasets to minimize risks and avoid discriminatory outcomes.

- Automatically logging activity to ensure the traceability of results.

- Creating detailed documentation providing all necessary information on the system and its purpose, enabling authorities to assess its compliance.

- Providing clear and appropriate information to deployers.

- Establishing human oversight measures to minimize risks.

- Maintaining a high level of robustness, safety, and accuracy.
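The logging obligation above is the most directly technical item in this list. As a minimal sketch, assuming an append-only JSON Lines file is an acceptable store, each AI-assisted decision could be recorded as one line. The AI Act does not prescribe this format: the field names, the hashing of inputs, and the helpers `make_log_record` and `append_log` are hypothetical design choices for illustration.

```python
import hashlib
import json
from datetime import datetime, timezone


def make_log_record(system_id, model_version, input_text, output, reviewer=None, now=None):
    """Build one log entry for an AI-assisted decision.

    Field names are illustrative assumptions, not a format set by the AI Act.
    """
    timestamp = (now or datetime.now(timezone.utc)).isoformat()
    record = {
        "timestamp": timestamp,
        "system_id": system_id,
        "model_version": model_version,
        # Design choice: keep a fingerprint of the input rather than copying it.
        "input_sha256": hashlib.sha256(input_text.encode("utf-8")).hexdigest(),
        "output": output,
        # Who exercised human oversight, if anyone.
        "human_reviewer": reviewer,
    }
    return json.dumps(record, sort_keys=True)


def append_log(path, line):
    # Open in append mode so existing entries are never rewritten.
    with open(path, "a", encoding="utf-8") as f:
        f.write(line + "\n")
```

Recording the model version and the reviewer alongside each output is what lets an auditor later reconstruct which system produced a given result and who oversaw it.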

All remote biometric identification systems are considered high-risk and are thus subject to stringent requirements. The use of real-time remote biometric identification in publicly accessible spaces for law enforcement purposes is prohibited in principle.

Exceptions are allowed under specific and strictly regulated circumstances, such as preventing an imminent terrorist threat.

Note: An AI system that was not initially classified as "high risk" may later acquire this status in several situations:

- Substantial modification of its functionalities or mode of operation: in this case, the entity adapting or redeploying the system is considered a provider under the AI Act, with all associated obligations;

- Evolution of the data it processes, altering the nature or impact of the system;

- Reuse in a high-risk use case, as defined in Annex III of the AI Act.

Limited/Moderate Risks:

Limited or moderate risk refers to dangers associated with the lack of transparency in the use of artificial intelligence.

The AI legislation introduces specific transparency obligations to ensure that individuals are informed when necessary, thus enhancing trust.

Example: When interacting with AI systems like chatbots, individuals must be informed that they are communicating with a machine, allowing them to make an informed decision about whether to continue or withdraw from their interaction.

Providers must also ensure that AI-generated content is identifiable. In addition, AI-generated texts published to inform the public on matters of general interest must be clearly labelled as generated by artificial intelligence.

This requirement also applies to audio and video content that may constitute deepfakes.
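For a chatbot, the disclosure duty can be sketched as a thin wrapper around the model's replies: tell the user on the first turn that they are talking to a machine, and tag every message as AI-generated. This is a hypothetical illustration. The AI Act does not prescribe this wording or data format, and `ChatSession`, `DISCLOSURE`, and the `ai_generated` flag are our own names. Marking generated images, audio, or video in a machine-readable way relies on other techniques (watermarks, metadata) that are not shown here.

```python
from dataclasses import dataclass, field

# Hypothetical disclosure wording; adapt it to your own product and language.
DISCLOSURE = "You are chatting with an AI system, not a human."


@dataclass
class ChatSession:
    disclosed: bool = False
    transcript: list = field(default_factory=list)

    def reply(self, generated_text: str) -> dict:
        """Wrap a model reply: disclose AI use once, and tag every message."""
        message = {"text": generated_text, "ai_generated": True}
        if not self.disclosed:
            # First turn: prepend the disclosure so the user can decide whether to continue.
            message["text"] = DISCLOSURE + "\n\n" + generated_text
            self.disclosed = True
        self.transcript.append(message)
        return message
```

Keeping the `ai_generated` flag on every stored message, not just the first, means the label survives if the transcript is later exported or republished.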

Minimal or no risks:

The law allows for the free use of AI presenting minimal risk. Most AI systems currently in use in the EU fall into this category. There are no specific obligations, but adherence to codes of conduct is encouraged.

Example: Video game bots, anti-spam filters, etc.
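The four-tier approach described above can be summarized as a lookup that checks the most severe tier first. This is a simplified sketch: the use-case labels (`social_scoring`, `chatbot`, and so on) are hypothetical tags drawn from this article's examples, and a real classification requires legal analysis of Article 5, Article 6, and Annex III.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "prohibited"
    HIGH = "high-risk obligations"
    LIMITED = "transparency obligations"
    MINIMAL = "no specific obligations"


# Hypothetical use-case tags, based only on the examples given in this article.
PROHIBITED_USES = {"social_scoring", "workplace_emotion_recognition", "subliminal_manipulation"}
HIGH_RISK_USES = {"critical_infrastructure", "education", "recruitment_cv_sorting",
                  "essential_services", "remote_biometric_identification"}
TRANSPARENCY_USES = {"chatbot", "deepfake_generation", "public_interest_text_generation"}
MINIMAL_USES = {"spam_filter", "video_game_bot"}


def triage(use_case: str) -> RiskTier:
    """Return the most severe tier matching a known use case."""
    if use_case in PROHIBITED_USES:
        return RiskTier.UNACCEPTABLE
    if use_case in HIGH_RISK_USES:
        return RiskTier.HIGH
    if use_case in TRANSPARENCY_USES:
        return RiskTier.LIMITED
    if use_case in MINIMAL_USES:
        return RiskTier.MINIMAL
    # Unknown uses are not silently treated as minimal risk.
    raise ValueError(f"unknown use case {use_case!r}: needs manual review")
```

Checking from most to least severe means a prohibited use is never "downgraded", and raising on unknown tags avoids defaulting to the lightest tier. In practice the tiers are not mutually exclusive (a high-risk system can also carry transparency obligations), and, as noted above, a system's tier can change after substantial modification or reuse, so the triage should be rerun.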

What are the penalties for non-compliance with the AI Act?

The AI Act provides for significant penalties for violations, comparable to those under the GDPR.

Non-compliance with the regulation may result in sanctions, including administrative fines. The maximum amounts vary depending on the severity of the infringement and the size of the company.

In cases of non-compliance with the rules on prohibited practices (unacceptable risks), fines may reach up to €35 million or 7% of total worldwide annual turnover, whichever is higher. Most other violations of the AI Act can be fined up to €15 million or 3% of worldwide annual turnover.

Supplying incorrect, incomplete, or misleading information to notified bodies or national competent authorities may be fined up to €7.5 million or 1% of worldwide annual turnover. For SMEs, including start-ups, the lower of the two amounts applies in each case.
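Each fine ceiling combines a fixed amount and a share of turnover. Under Article 99 of the adopted Regulation, these are €35 million or 7%, €15 million or 3%, and €7.5 million or 1%. The higher of the two applies to most companies and the lower to SMEs and start-ups. The arithmetic can be sketched as follows. The tier keys and the function name are our own, and the result is only the legal maximum: actual fines are set case by case.

```python
# (fixed cap in EUR, percentage of total worldwide annual turnover), per Article 99.
FINE_TIERS = {
    "prohibited_practice": (35_000_000, 7),
    "other_obligation": (15_000_000, 3),
    "incorrect_information": (7_500_000, 1),
}


def max_fine(tier: str, worldwide_turnover_eur: float, is_sme: bool = False) -> float:
    """Upper bound of the administrative fine for a given infringement tier."""
    fixed, percent = FINE_TIERS[tier]
    turnover_based = worldwide_turnover_eur * percent / 100
    # Most undertakings: whichever is higher. SMEs and start-ups: whichever is lower.
    return min(fixed, turnover_based) if is_sme else max(fixed, turnover_based)
```

For a company with €1 billion in turnover, a prohibited practice can cost up to €70 million (7% exceeds the €35 million floor). With €100 million in turnover, the ceiling is €35 million, or €7 million if the company is an SME.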

This sanction regime applies from 2 August 2025 (fines on providers of general-purpose AI models apply from 2 August 2026), whereas the prohibitions under Article 5 apply from 2 February 2025.

From 2 February 2025, companies breaching these prohibitions may therefore also face civil, administrative, or criminal proceedings under other EU laws, such as product liability rules or the GDPR in cases of unlawful processing of personal data.

Natural and legal persons may lodge a complaint with the relevant market surveillance authority (Article 85), and affected persons have the right to an explanation of individual decisions taken on the basis of certain high-risk AI systems (Article 86).

Phased implementation timeline

- February 2, 2025: Implementation of general provisions and the chapter on prohibited practices.

- August 2, 2025: Implementation of the chapter concerning notified authorities and notified bodies, the chapter on general-purpose AI, the governance chapter, and the sanctions chapter.

- August 2, 2026: General applicability of the regulation.

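- August 2, 2027: Application of Article 6(1) and the corresponding obligations for high-risk AI systems that are products, or safety components of products, covered by the EU harmonisation legislation listed in Annex I.

- August 2, 2030: Providers and deployers of high-risk AI systems intended for use by public authorities, and already placed on the market or put into service before August 2, 2026, must comply with the requirements and obligations of the regulation.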
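Compliance teams that track these milestones in an internal tool can encode them as a simple date table. The sketch below is hypothetical (`MILESTONES` and `applicable_on` are our own names). It only restates the dates listed above and does not model the transitional rules for systems already on the market.

```python
from datetime import date

# Milestones as listed in this article, in chronological order.
MILESTONES = [
    (date(2025, 2, 2), "General provisions and prohibited practices"),
    (date(2025, 8, 2), "Notified bodies, general-purpose AI, governance, penalties"),
    (date(2026, 8, 2), "General applicability"),
    (date(2027, 8, 2), "High-risk obligations under Article 6(1)"),
    (date(2030, 8, 2), "High-risk systems used by public authorities"),
]


def applicable_on(day: date) -> list[str]:
    """Return the milestones whose start date is on or before the given day."""
    return [label for start, label in MILESTONES if start <= day]
```

For example, `applicable_on(date(2025, 8, 2))` returns the first two milestones, and any date from August 2, 2030 onward returns all five.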

Governance

The AI Act relies on a two-tier institutional architecture (national and European) to ensure that the regulation is applied consistently across the Union.

1. At the national level: supervisory authorities

Each member state must designate national competent authorities responsible for:

- Monitoring the market and controlling AI systems,

- Verifying compliance assessments,

- Designating and supervising notified bodies authorized to conduct audits,

- Enforcing sanctions in cases of non-compliance.

The designation of the national competent authority is expected by August 2025. This authority will need to closely collaborate with the European AI Office to ensure coherence of implementation.

2. At the European level: centralized management

Within the European Commission, the EU AI Office serves as the main institution for oversight, especially regarding general-purpose AI models.

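It works alongside several advisory bodies:

- The European Artificial Intelligence Board (AI Board), composed of representatives of the member states, which coordinates national authorities and advises on the consistent application of the regulation.

- The Advisory Forum, which brings together stakeholders from industry, start-ups, civil society, and academia to provide technical expertise and integrate a diversity of viewpoints.

- The Scientific Panel, composed of independent experts, tasked with identifying systemic risks, issuing technical recommendations, and contributing to the definition of classification criteria for models.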

3. Objective

This system aims to ensure rigorous, transparent, and scientifically-based governance to support businesses and citizens within an ever-evolving regulatory framework.

Meeting the requirements of the AI Act with Dastra

Our Dastra software helps you establish compliance with the AI Act. Dastra now includes a comprehensive register of AI systems with integrated risk analysis and mapping of assets, data, and the relevant AI models.